A detailed execution plan for building products with customers (part 2)

Talking to customers and collecting feedback + Iterating based on feedback and insights

In Part 1, we explored an effective way to find customers to build with and how to make sure we get to talk to them. Whether that is over a call, email, or via Loom.

As a quick recap, in-app surveys have worked quite well for us. They are non-intrusive, easy to fill out, and they get customers in the right mindset to ask them for a chat. When reaching out, make sure you provide alternatives to a call. Looms are a great way to receive feedback and see live interactions with prototypes. And you don't have to block time on someone’s busy schedule.

In Part 2, we explore the conversations you get to have when on a call with a customer, and how to record, analyze, and use feedback to iterate on designs and prototypes.

This is a two-part series about a detailed plan to execute on the premise of building with customers.

Part 1: Finding customers willing to speak to you + Scheduling calls with customers (and alternatives) - Read part 1 here

Part 2: Talking to customers and collecting feedback + Iterating based on feedback and insights <- This article

Alright. We have customers we can talk to. We have reached out to get more feedback. Some have scheduled a call with us, some want to do it over email, and a few sent a Loom. Let’s go and talk to them!

1) Talking to customers and collecting feedback

Conversations about a feature, a problem, or a prototype can happen in very different ways. They can be in the form of an email thread, a virtual video call, or even a Loom. What matters is getting valuable information, opinions, and reactions from your customers. You get to understand their problems, their incentives, and their goals. Make sure this gets documented.

Over email

It is tricky, I won’t lie. It will require some back and forth but it can be done! What I usually do when testing a specific solution to a problem is:

I send them the prototype links along with some tips on how to use them;

I let them write back whatever they want. If there are misunderstandings, I try to check whether the prototypes are not clear enough or whether the customer misunderstood something.

I follow up with questions about their feedback. I try to understand what they did with the prototype and if they found any value in it. The most important thing is to know if they understood what the prototype did and if it solves a problem they have in an easy way.

Doing these interviews over email requires some extra effort as misunderstandings are common. It helps to write clearly and to use screenshots or videos to show what you mean. It’s possible. And it’s better than no feedback at all.

Over a video call

This is the preferred way to do it as it’s much easier, and it scales well if you keep calls down to 15-20 minutes. Even so, you can’t interview enough people to get representative data. Instead, you should interview for variation, as Teresa Torres suggests.

The agenda and interview flow we use are the following:

Quick intros + background about the problem we want to solve and why.

Explain to the customer why we contacted them. We use their participation in the in-app survey as the main reason - see Part 1 here for context.

Ask the customer to share their screen and share the prototype link in the chat. This usually causes some technical friction. I recommend making sure you host the call on the tool the person is most familiar with. We use Google Meet but a lot of our customers use Zoom or Microsoft Teams, for example.

Explain the user scenario and ask them how they would go about it. Then, let them do it on their own. This usually sounds something like “Imagine you are person A who wants to do X. How would you go about doing it?”

Observe what they do, and how quickly they do it. And listen to what they say/don’t say.

Time for some in-depth questions and discussion.

Seeing the customer go through the flow immediately sparks a lot of curiosity. Here are some of our favorite questions to explore:

“Did you understand what you did during the flow?” - if so, ask them to explain it to you to make sure it was clear and that you are aligned.

“What problem would this solve for you?” - if it’s not clear to them, it’s likely they don’t have the problem. But, if they nail the answer, you know you’re onto something.

“We saw you clicked on X, why did you choose that instead of Y?” - we use this one when there are choices to make during the flow (e.g. 2 buttons with different CTAs). It’s a question that helps to understand how they were thinking while using the prototype.

“Was there anything that felt unnatural during the flow?” - is a helpful question to remove our biases as we tend to take some things for granted (we know the product too well).

“Was there anything you felt was missing?” - we love this one as it tells us if we missed any important bits we would only know about if we were a customer. Still, be mindful of the must-haves and the nice-to-haves. Just because something is missing does not mean it’s critical to solving the problem.

“Were there any surprises (good and bad) while you were interacting with it?” - also a very interesting one to test how well received it would be by the customer base. It overlaps a little with “Was there anything that felt unnatural during the flow?”.

We ask open questions and let the customer speak as much as possible. Some people will talk unprompted, which is awesome. Others will need you to make them feel comfortable first. They will require a bit more effort from you when interacting and asking questions.

You can repeat this interview agenda from step 4 (explaining the user scenario) onwards with alternative flows and with other prototypes you are looking to test. We usually test 2 flows per prototype and 2 prototypes per call. This helps keep the call short.

Finally, one of the main advantages of a video call is that you get to see their face. Use this to read the person you’re interviewing. You should be able to infer how credible each answer is based on their facial expressions. Here are a few things you can look out for and others to be aware of:

Weird faces as in “What am I supposed to do here?” will most likely signal confusion, which you should explore further and clear up. Don’t ignore this if you see it. Write down your observation and later ask why they seemed confused.

Surprised faces as in “Oh, wow, this is really good!” are exactly what you are looking for. Consider this the product-market fit for a prototype. You know it’s valuable without having to ask.

Short and assertive “Yeses”. When people reply “Yes” without explaining their answer, I usually doubt it, especially when they say it in agreement so as not to cause conflict or disappoint us. Most people are very nice, and they will say “Yes, this would be valuable.” because they want to be polite. Other times it will be because they don’t understand it and don’t feel comfortable asking you to explain it. This is dangerous. You should always explore the short and dry “Yeses”. More often than not, you will realize they don’t have the problem you are trying to solve.

Via Loom

Using Looms for customer interviews is like being the cool kid who also happens to be smart. While a modern way to go about it, Looms can be very effective at delivering feedback from customers in a more comfortable way.

In contrast to calls, on a Loom, people are free to say and do whatever they want, when they want, at their own pace. It’s raw and uninterrupted feedback. It’s stripped of any biases you may introduce with the way you ask questions. It does not get interrupted by clarifications or follow-up questions. It’s recorded so you can watch it later and share it with others. And the cherry on the cake is that it’s done asynchronously. Time is not blocked on your or the customer’s calendar.

When it comes to the interaction, I usually comment directly on the Loom. Being able to comment at a specific moment of the video helps me be specific and point out something they did or said. To make sure they see it, I also email them the responses or record a Loom myself exploring their feedback.

Some people enjoy discovering new tools and interacting with them. And it’s fun being able to exchange Looms with customers and document the process in video.

Ok. Now that we have talked to customers, what do we do with all the information we got?

2) Iterating based on feedback and insights

One of the benefits of having a colleague on a call is the fact one can take notes while the other asks questions. This way we make sure we don’t miss any information. We do this during calls and then combine our notes together in a spreadsheet. We know who said what, when, and about which feature/problem we were investigating. At the end of each call, we also sync with each other on Slack. We share impressions and make quick decisions on iterations.

When you start interviewing customers about a particular problem or a prototype, the data is scarce. You haven’t collected much yet. We usually wait before making any decisions to iterate. Yet, when feedback starts accumulating, there will be a few things that will arise. Some will be very clear, others not so much. When this happens, double-check your intuition against the data from interviews and iterate if it makes sense.

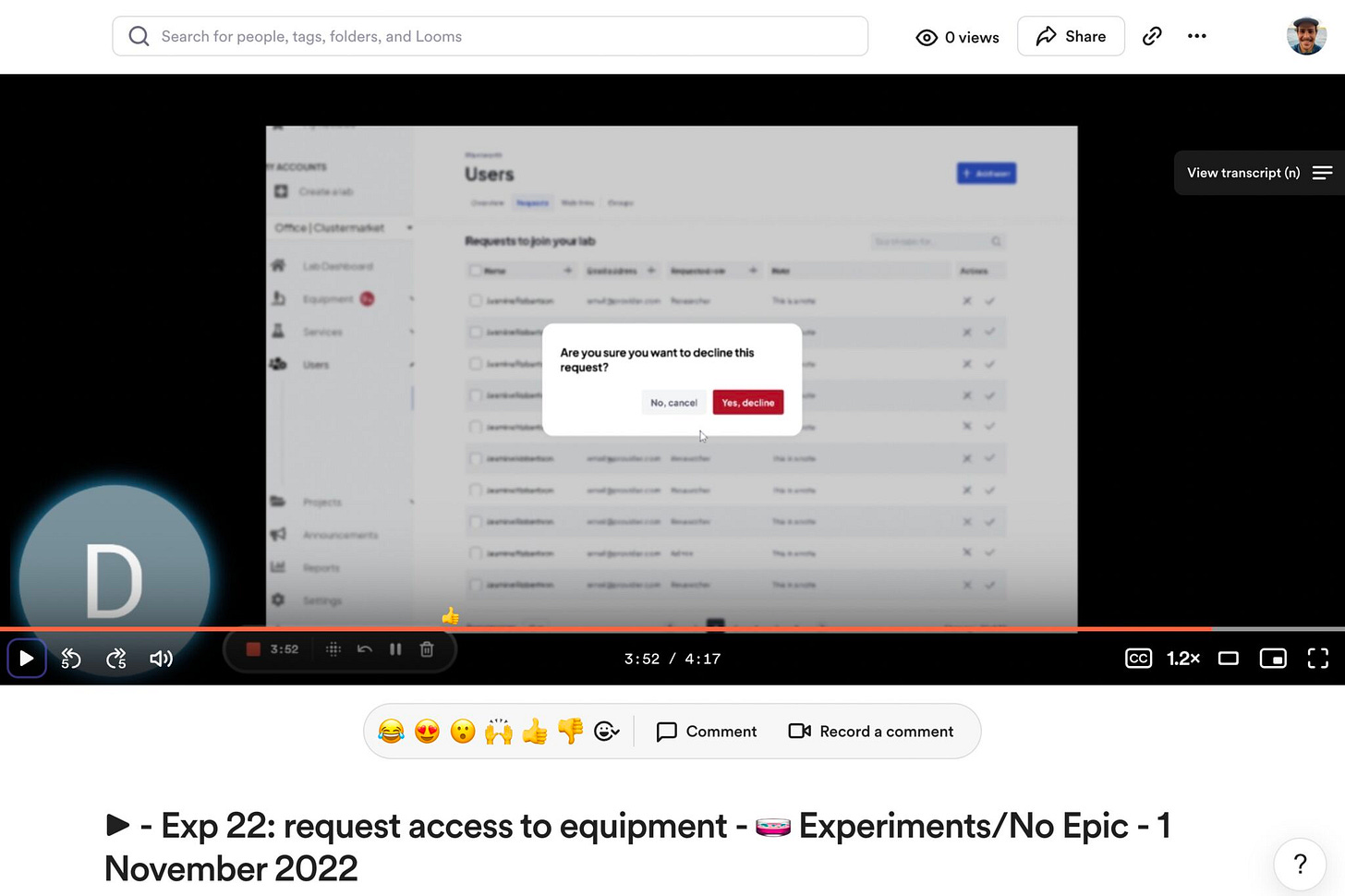

For example, during a recent testing phase, there was a flow that involved declining a request. We decided to keep the prototype as simple as possible. Good enough to solve the problem. In this case, we wanted to allow an admin to decline requests with only two clicks (1-Decline; 2-Yes, I’m sure).

However, adding a written reason when declining became something everyone mentioned as missing. We checked our notes, measured how many times it was mentioned, and decided to add it. For the next calls, we had a newer version with a text field to add a reason. People started mentioning how nice it was to be able to tell why they were declining it! We felt smarter.

Another example of a feedback-driven iteration came out of confusion. We uncovered this during discussions at the end of some calls. Some of the things customers were saying did not make much sense. A clear signal something was wrong.

What was happening was that people seemed not to trust what the prototype was doing. When asked about what they would do next, everyone mentioned they would go and check if what they did really changed something.

To dig deeper into this, we explored where this lack of trust was coming from. By asking “Was there anything that felt unnatural?”, we discovered the page the user was landing on did not prove what had happened. They needed visual confirmation of the changes. A success message was not enough. They wanted to see the changes with their own eyes.

In this case, this was related to a change in user permissions. But we were not showing them the altered permissions at the end. So we changed it. The final page of the flow was now a page where the user permissions were clearly shown. And as a consequence, there was less confusion about it in the following calls.

Individually, customer interviews are qualitative data. But when you pool multiple interviews together, you can get quantitative data out of them. The balance between both is key. Talking to people allows you to check for things like facial expressions which you can’t check for in a survey.

On the other hand, people are different and your interviews will be different from each other. That is why it’s important to collect the feedback, go through it with your team, and analyze it for patterns. If you do enough interviews, you will see patterns emerging. You will see some things being mentioned multiple times. Then, all you have to do is confirm it makes sense for the business and that the quantitative data supports it. If so, you act on it.
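If your notes live in a spreadsheet, counting mentions can be done with a few lines of code. Below is a minimal sketch in Python, not the way we actually do it. The `Note` fields, the theme labels, the sample rows, and the `min_customers` threshold are all assumptions made up for illustration. It counts how many different customers raised each theme, so that one person repeating the same point does not look like a pattern.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    """One row of interview notes (hypothetical structure)."""
    customer: str  # who said it
    feature: str   # feature/problem being investigated
    theme: str     # short label assigned to the feedback when reviewing notes


def mentions_per_theme(notes):
    """Count distinct customers per (feature, theme) pair."""
    customers = defaultdict(set)
    for note in notes:
        customers[(note.feature, note.theme)].add(note.customer)
    return {key: len(who) for key, who in customers.items()}


def split_patterns(counts, min_customers=3):
    """Separate themes raised by many customers from likely outliers.

    min_customers is an arbitrary cut-off chosen for this example.
    """
    patterns = {k: n for k, n in counts.items() if n >= min_customers}
    outliers = {k: n for k, n in counts.items() if n < min_customers}
    return patterns, outliers


# Invented sample data, loosely based on the "decline a request" example.
notes = [
    Note("Customer A", "decline request", "wants to add a reason"),
    Note("Customer B", "decline request", "wants to add a reason"),
    Note("Customer C", "decline request", "wants to add a reason"),
    Note("Customer C", "decline request", "wants to add a reason"),  # same person, said twice
    Note("Customer B", "decline request", "wants bulk decline"),
]

counts = mentions_per_theme(notes)
patterns, outliers = split_patterns(counts)
print("Patterns:", patterns)
print("Outliers:", outliers)
```

The count is only a starting point. Whether a pattern is worth acting on, or an outlier worth keeping an eye on, is still a judgment call for you and your team.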

Closing notes

You want to talk to customers and collect feedback in any way possible. Be flexible, use the tools they already use, and meet them where they are. Use in-app surveys to catch them at the right time when in the right mindset (check Part 1 for how to do this).

Always ask ‘why?’ to dig deeper into people’s answers. Ask why they did what they did or thought about a feature/prototype the way they did. This is more important than the actions taken.

When interviewing enough people for variation, you’ll find yourself with diverse qualitative data. Check for quantifiable patterns, understand, and act on them. The outliers (i.e. the feedback points only 1-2 people mentioned) are important and should be analyzed for impact. If we interviewed more people, what are the chances this could also become a pattern? If high, consider it in this or in the next iteration. If low, ignore it. It’s important to be able to ignore feedback and say no, especially when the feedback is an outlier.

Finally, don’t do this alone. Do it together with your team. I’m lucky enough to work with Dan Raubenheimer, who is our brilliant Product Designer. Together, we tackle customer interviews, analyze feedback, and iterate on designs.

Grab your designer and go explore your customer base. You will have lots of fun and end up building a better product. I promise.

This is the end of Part 2 of this two-part series about a detailed plan to execute on the premise of building with customers. You can check Part 1 here.